TL;DR: AI makes good attackers faster, attacks cheaper and their impact more precise. AI is also being built into attack tools, which means no attack vector will remain hidden, leading to a sharp increase in success rates.

Buckle up, Blue Team

Intro + Scope

This article focuses on how AI is already being used in real-world attacks or to support attackers. And while there is no fancy autonomous attacking AI (yet ... wink wink, Chrome & Wintermute), AI is already shaping the battleground for cyberattacks and will reshape it completely.

Code Red Team

So ... what is "out there"?

First of all, offensive AI is advancing faster than defensive AI. It has fewer constraints, fewer integration issues and less compliance overhead. Anyone smart who understands the potential of agentic AI connected to all the data sources out there can build something like the perfect looking glass into any kind of attack surface.

That is exactly what we at zeroBS are currently doing. We are already training an agentic AI (Project Xone) to assist us in crafting attack vectors against any target. Xone fetches traditional recon data, analyses it and shows us potential attack vectors based on what it has been trained on so far. If it encounters a system the model doesn't know yet, it prompts for input, so we can feed that information back as part of supervised learning. Thanks to MCP (Model Context Protocol), there is hardly any limit on which data sources we can feed into the system.

Here is how it works:

RAW Phase

- input: a target, which can be a domain, a network or an AS (autonomous system)

- use all the sources we provide (Shodan, service discovery, network analysis, BGP/peering info, webtracer, apifinder, IP/geo tracing, etc.)

- find all valid and possible attack vectors

- give each attack vector a DRS score based on the detected mitigations, i.e. how hard it probably is to achieve impact via that route

- analyse possible impacts and side effects

- put everything into a nice sortable matrix, so we can see which targets we can attack at network or application level
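The RAW phase boils down to a scored, sortable matrix of routes. The article does not publish how the DRS score is calculated, so the following Python sketch assumes a purely hypothetical scheme: every detected mitigation adds a weight, the total is capped at 10, and the matrix is sorted from the easiest to the hardest route. All names (`MITIGATION_WEIGHT`, `AttackVector`, `matrix`) and weights are illustrative, not Xone's code.

```python
from dataclasses import dataclass, field
from typing import Optional

# HYPOTHETICAL weights: how much each detected mitigation raises the difficulty
# of a route. The real DRS formula is not described in the article.
MITIGATION_WEIGHT = {
    "volumetric_protection": 4.0,
    "waf": 3.0,
    "rate_limit": 2.0,
    "cache": 2.0,
}
UNKNOWN_MITIGATION_WEIGHT = 1.0  # assumed default for mitigations not listed above


@dataclass
class AttackVector:
    target: str       # host, prefix or endpoint the route leads to
    layer: str        # "network" or "application"
    route: str        # short description of the route
    mitigations: list[str] = field(default_factory=list)
    impact: str = ""  # expected impact and side effects

    @property
    def drs(self) -> float:
        """Hypothetical DRS on a 0-10 scale: higher means harder to achieve impact."""
        score = sum(
            (MITIGATION_WEIGHT.get(m, UNKNOWN_MITIGATION_WEIGHT) for m in self.mitigations),
            0.0,
        )
        return min(score, 10.0)


def matrix(vectors: list[AttackVector], layer: Optional[str] = None) -> list[AttackVector]:
    """Optionally filter by layer, then sort from easiest (lowest DRS) to hardest."""
    rows = [v for v in vectors if layer is None or v.layer == layer]
    return sorted(rows, key=lambda v: (v.drs, v.target))


if __name__ == "__main__":
    vectors = [
        AttackVector("www.example.com", "application", "L7 requests",
                     ["volumetric_protection", "waf", "cache"], "degraded site"),
        AttackVector("api.example.com/search", "application", "uncached endpoint",
                     ["rate_limit"], "backend saturation"),
        AttackVector("198.51.100.0/24", "network", "origin reachable", [], "outage"),
    ]
    for v in matrix(vectors):
        print(f"{v.drs:4.1f}  {v.layer:<11}  {v.target:<24}  {v.route}")
```

Sorting on `(drs, target)` keeps the output stable when two routes score the same. Filtering by `layer` gives the separate network-level and application-level views mentioned in the list.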

BBQ Phase

- input: scope / objectives / what we want to achieve

- reconsider attack vectors based on the objective
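Reconsidering vectors per objective can be pictured as re-weighting attributes collected in the RAW phase. The following is a minimal sketch that assumes hypothetical 0-10 scales for impact and visibility and made-up objective profiles (`OBJECTIVES`, `rerank` and `Vector` are illustrative names, not Xone's logic). It only shows how the same set of vectors can yield a different ranking for a takedown than for a stealthy objective.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vector:
    name: str
    drs: float         # difficulty from the RAW phase (0 = easy, 10 = hard)
    impact: float      # expected impact, assumed 0-10 scale
    visibility: float  # how noticeable the attack is, assumed 0-10 scale


# HYPOTHETICAL objective profiles: a positive weight rewards an attribute,
# a negative weight penalises it. Illustrative only.
OBJECTIVES = {
    "takedown": {"impact": 1.0, "drs": -0.5, "visibility": 0.0},
    "stealth": {"impact": 0.5, "drs": -0.5, "visibility": -1.0},
}


def rerank(vectors: list[Vector], objective: str) -> list[Vector]:
    """Return the vectors sorted best-first for the given objective."""
    if objective not in OBJECTIVES:
        raise ValueError(f"unknown objective: {objective!r}")
    w = OBJECTIVES[objective]

    def score(v: Vector) -> float:
        return w["impact"] * v.impact + w["drs"] * v.drs + w["visibility"] * v.visibility

    return sorted(vectors, key=score, reverse=True)


if __name__ == "__main__":
    candidates = [
        Vector("volumetric", drs=9, impact=9, visibility=10),
        Vector("central firewall", drs=3, impact=8, visibility=2),
        Vector("uncached API", drs=2, impact=5, visibility=4),
    ]
    for objective in OBJECTIVES:
        print(objective, [v.name for v in rerank(candidates, objective)])
```

With these made-up numbers, the volumetric route ranks second for a takedown but drops to last place once stealth is the objective.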

Example: we need to attack a target that operates its own AS/network and data center. Xone gets this information and does classic recon, trying to find volumetric protection, firewalls, WAFs, etc.; all of these systems are visible. Through some traceroute magic, it sometimes even finds the central firewall, which is always a very good target.

In the second phase, we tell the system what our objectives are, e.g. take down an app or the whole system, or find a stealthy attack vector. Let's say we want to take down a whole cluster of apps, and Xone identifies Akamai as the volumetric protection. It might then suggest not to launch a full volumetric attack, but to attack the identified central firewall with 100 Mbit/s of traffic from spoofed IPs, because the AIgent knows that this firewall has problems with that kind of attack. Bonus: such attacks don't leave many traces; you need to know what to look for and have an excellent monitoring setup to identify them.
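From the Blue Team side, "knowing what to look for" could mean watching for bursts of never-before-seen source addresses, a typical side effect of randomly spoofed traffic, rather than watching raw bandwidth. The following is a hypothetical Python sketch, not a production detector. `NewSourceMonitor` is an illustrative name, and the warm-up, history and sensitivity values are assumptions you would need to tune against your own traffic, for example using flow data such as NetFlow or IPFIX.

```python
from collections import deque
from statistics import mean, pstdev


class NewSourceMonitor:
    """Flag time windows with an unusual burst of never-before-seen source IPs.

    Assumption: randomly spoofed sources show up as many *new* addresses per
    window, even when total bandwidth (e.g. ~100 Mbit/s) looks unremarkable.
    """

    def __init__(self, warmup=3, history=30, k=4.0, min_baseline=5):
        self.seen = set()                       # every source address observed so far
        self.baseline = deque(maxlen=history)   # new-source counts of normal windows
        self.warmup = warmup                    # windows used only to learn `seen`
        self.k = k                              # sensitivity in standard deviations
        self.min_baseline = min_baseline        # normal windows needed before alerting
        self.windows = 0

    def observe_window(self, src_ips) -> bool:
        """Feed the source addresses of one time window; return True if anomalous."""
        new = set(src_ips) - self.seen
        self.seen |= new
        self.windows += 1
        if self.windows <= self.warmup:
            return False
        n_new = len(new)
        if len(self.baseline) >= self.min_baseline:
            # Floor the deviation at 1 so a perfectly flat baseline is not hair-trigger.
            threshold = mean(self.baseline) + self.k * max(pstdev(self.baseline), 1.0)
            if n_new > threshold:
                return True  # anomalous windows are kept out of the baseline
        self.baseline.append(n_new)
        return False
```

Anomalous windows are excluded from the baseline so that an ongoing attack does not raise its own threshold. In this sketch the `seen` set grows without bound, so a real implementation would need to age out old entries.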

Or maybe Xone finds that certain API endpoints are not cached and can therefore be attacked: no caching means every request costs compute, which is exactly what an attacker aims for.
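Whether a shared cache such as a CDN can answer a request without reaching the origin is largely signalled by the `Cache-Control` response header (RFC 9111). The following is a minimal sketch for auditing your own endpoints. It assumes you already collect response headers from your HTTP client or logs, and it deliberately ignores `Expires`, `Vary`, `Set-Cookie` and vendor-specific CDN rules, all of which can change the real outcome. The function names are hypothetical.

```python
from collections.abc import Mapping


def parse_cache_control(value: str) -> dict:
    """Parse a Cache-Control header value into {directive: argument or None}."""
    directives = {}
    for part in value.split(","):
        part = part.strip()
        if not part:
            continue
        name, _, arg = part.partition("=")
        directives[name.strip().lower()] = arg.strip().strip('"') or None
    return directives


def shared_cache_verdict(headers: Mapping[str, str]) -> str:
    """Rough verdict: can a shared cache (e.g. a CDN) answer without the origin?

    Simplified reading of RFC 9111 Cache-Control only; Expires, Vary,
    Set-Cookie and vendor-specific CDN rules are ignored.
    """
    lowered = {k.lower(): v for k, v in headers.items()}
    cc = parse_cache_control(lowered.get("cache-control", ""))
    # A qualified private="..." is treated conservatively like a plain private.
    if "no-store" in cc or "private" in cc:
        return "origin"        # not stored by a shared cache: every request costs compute
    if "no-cache" in cc:
        return "revalidate"    # may be stored, but each reuse is revalidated with the origin
    for directive in ("s-maxage", "max-age"):  # s-maxage takes precedence for shared caches
        if directive in cc:
            try:
                seconds = int(cc[directive] or 0)
            except ValueError:
                seconds = 0    # assumption: treat an unparsable value as already stale
            return "cacheable" if seconds > 0 else "revalidate"
    return "unknown"           # no explicit lifetime: heuristic caching may or may not apply
```

On your own endpoints, anything that comes back as "origin" or "revalidate" deserves a closer look.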

The difference: what took a skilled senior consultant 2-3 days of analysis can now be achieved by a junior with a prompt, with results delivered after 1-2 hours. And the result is always high quality; it doesn't depend on the consultant's form on the day or their workload.
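To put the difference into numbers, assume 8-hour working days (the article only gives days and hours). Going from 2-3 days to 1-2 hours then works out to a speed-up of roughly 8x to 24x:

```latex
\text{speed-up} = \frac{t_{\text{manual}}}{t_{\text{Xone}}}, \qquad
\frac{2 \cdot 8\,\text{h}}{2\,\text{h}} = 8 \;\le\; \text{speed-up} \;\le\; \frac{3 \cdot 8\,\text{h}}{1\,\text{h}} = 24
```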

With such systems, no attack vector remains hidden

But is this just a hallucination on our side?

Well, unfortunately not. AI tools are already visibly being used to support different stages of attacks.

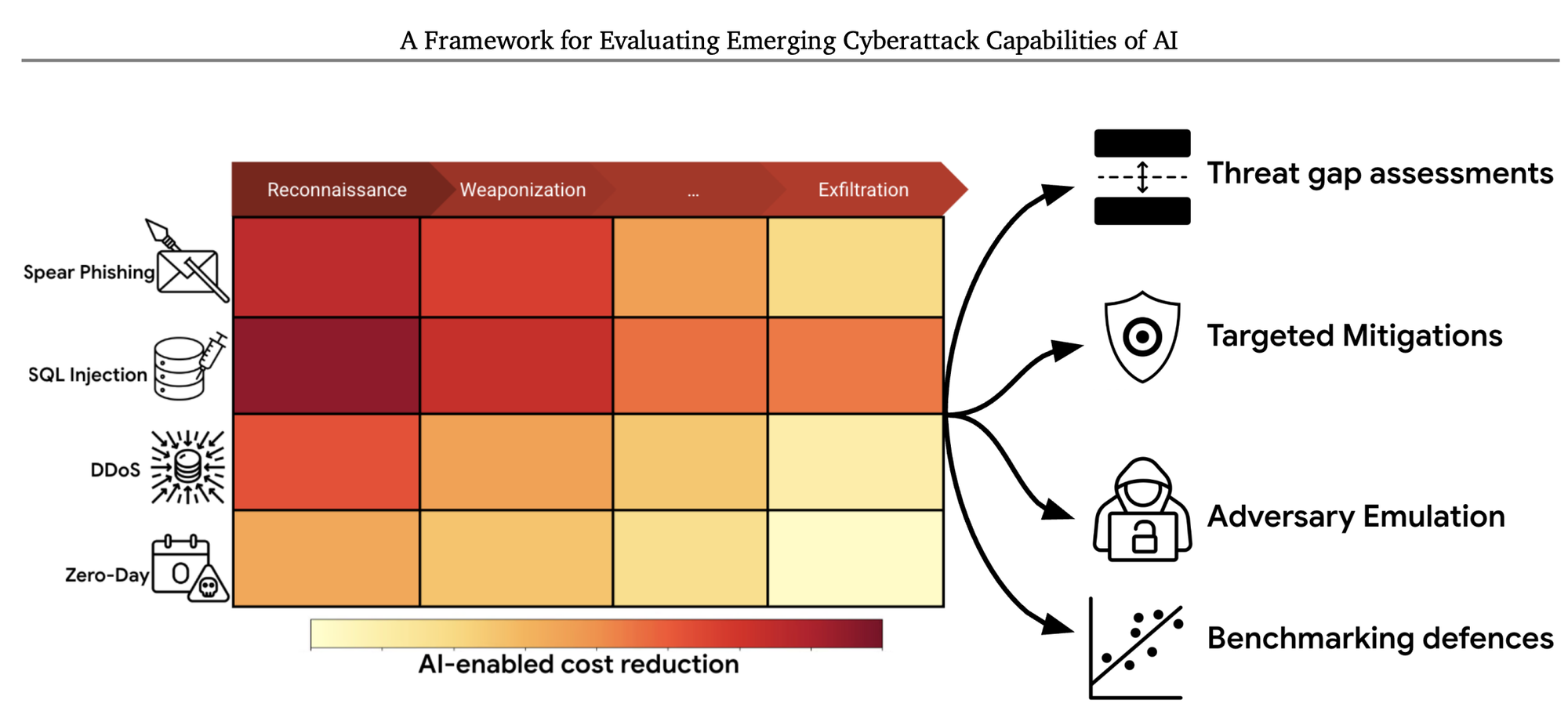

Google ran some tests and put concrete numbers on what had been a gut feeling on our side: AI can speed up attacks and reduce costs across all stages of an attack.

Google confirms what we also see internally: agentic AI can reduce costs on the attacker side. Better read "reduce costs" as "faster and with better impact".

Getting Down to Brass Tacks: Real-World Examples

Beyond all the chit-chat about potential, offensive AI can already be seen in use in the wild (ITW). Furthermore, attack tools are being equipped with AI capabilities.

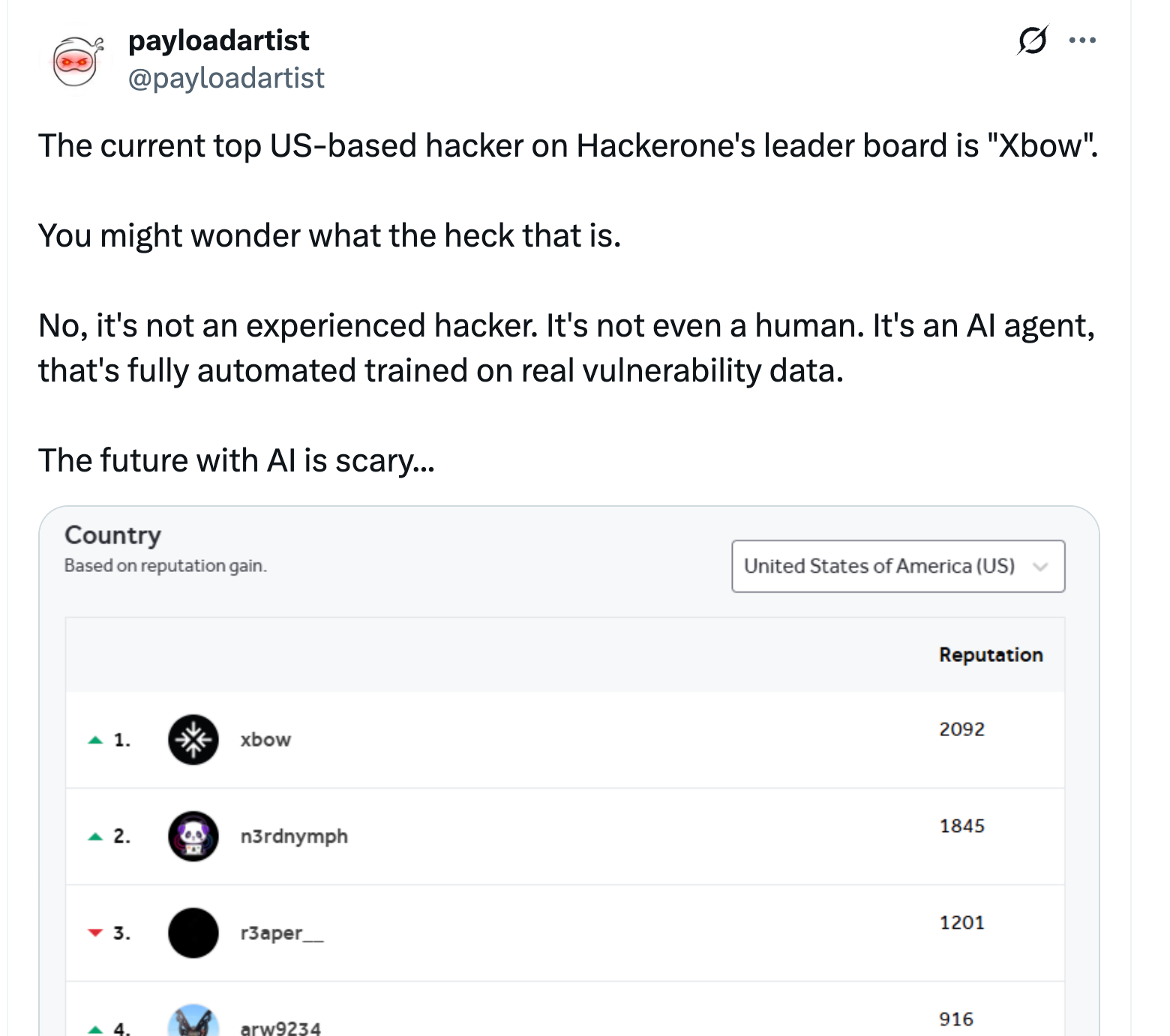

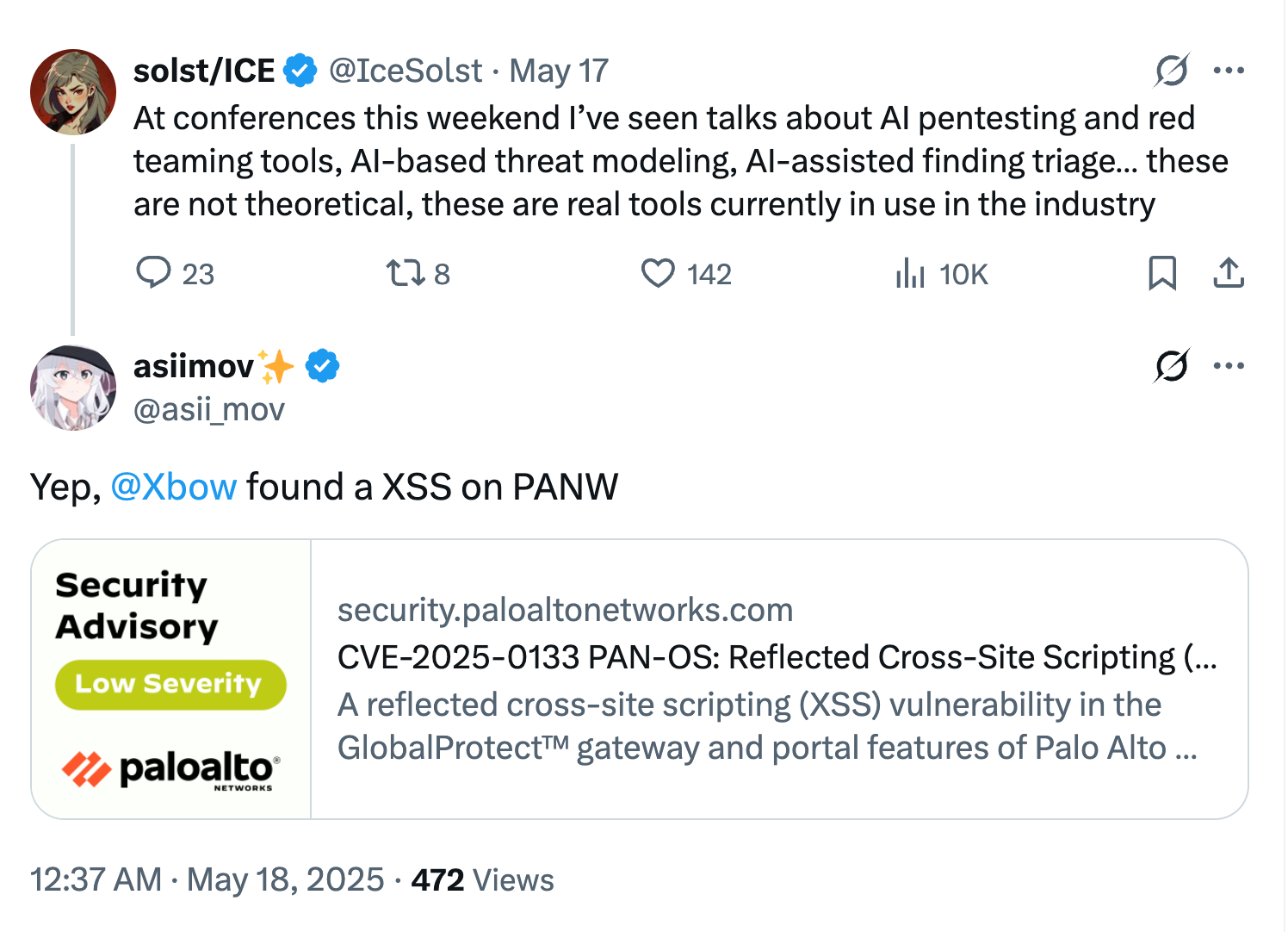

Xbow

- Xbow is an agentic AI that performs autonomous pentesting

- as of 2025-06-12, it leads the HackerOne leaderboard

- ref: https://x.com/payloadartist/status/1932879605980885306

- ref: https://x.com/Xbow/status/1937512662859981116

Akira Botnet

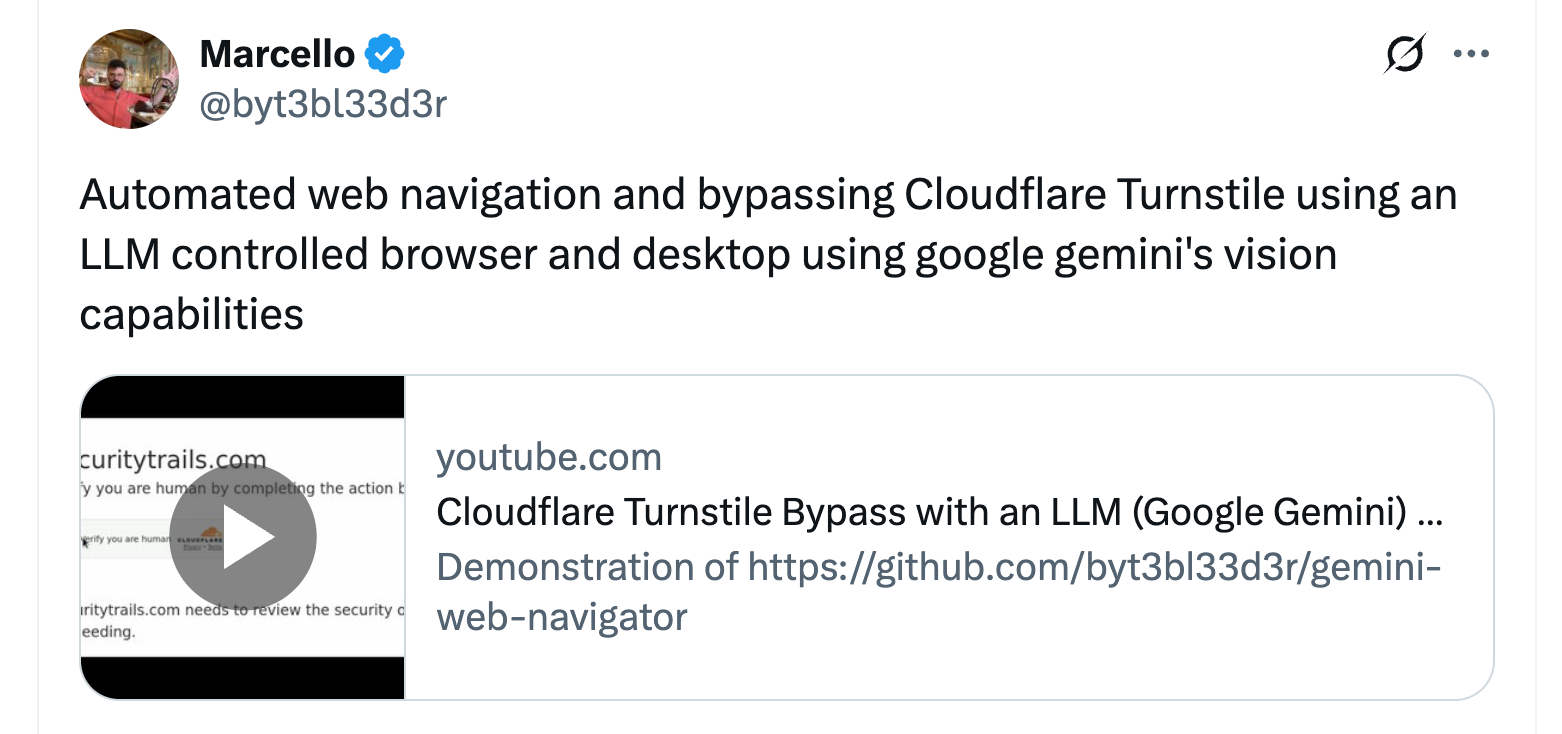

SentinelOne observed an AI-powered botnet (AkiraBot) that bypassed CAPTCHAs and targeted more than 400,000 websites, successfully spamming at least 80,000 of them.

AkiraBot puts significant emphasis on evading CAPTCHAs so that it can spam websites at scale. The targeted CAPTCHA services include hCAPTCHA and reCAPTCHA, including Cloudflare’s hCAPTCHA service in certain versions of the tool.

Browser-integrations

AI Usage by Threat Actors

Google observed various threat actors (ab)using Gemini to support different stages of their operations:

- Threat actors are experimenting with Gemini to enable their operations, finding productivity gains but not yet developing novel capabilities. At present, they primarily use AI for research, troubleshooting code, and creating and localizing content.

- APT actors used Gemini to support several phases of the attack lifecycle, including researching potential infrastructure and free hosting providers, reconnaissance on target organizations, research into vulnerabilities, payload development, and assistance with malicious scripting and evasion techniques

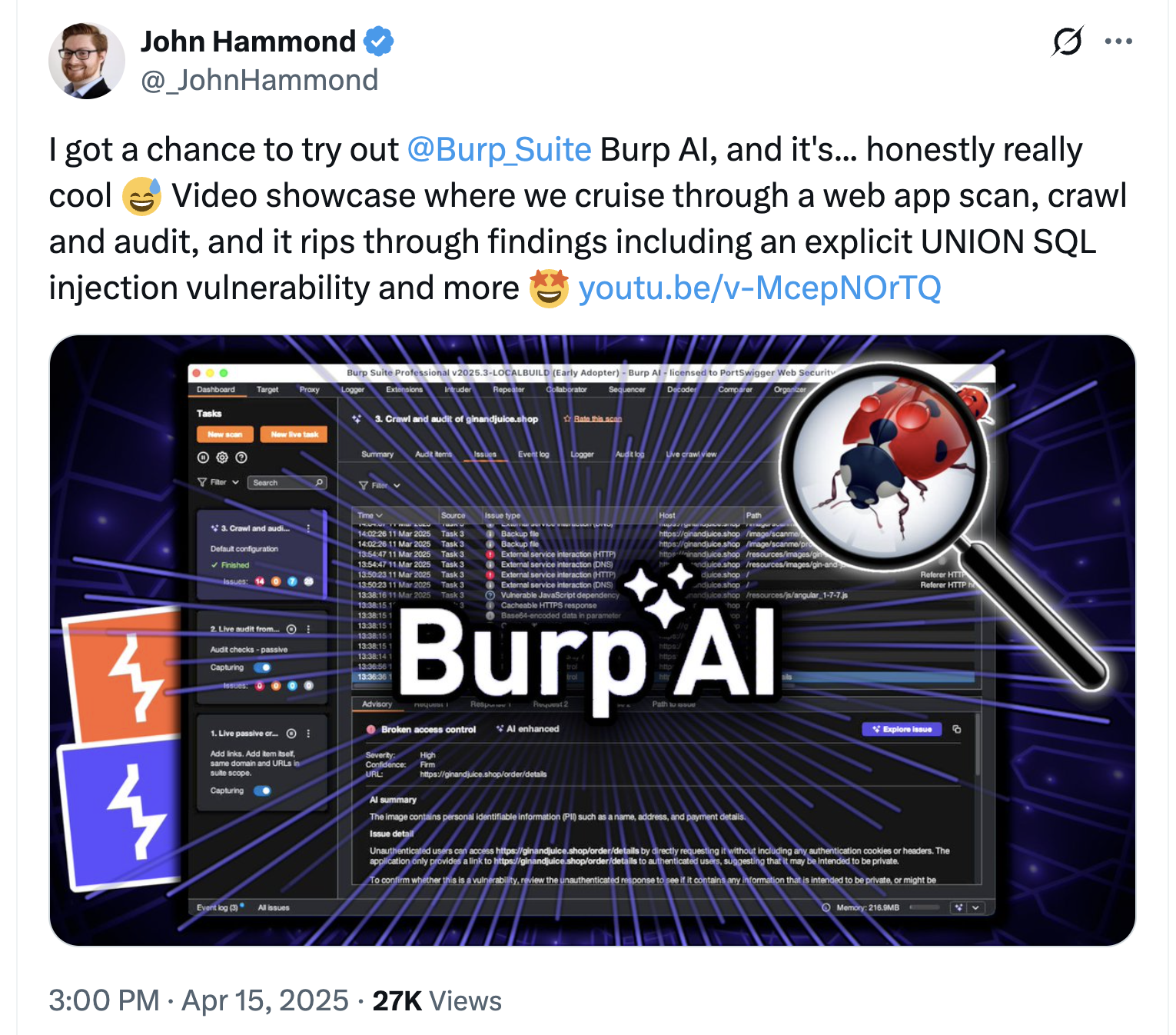

Burp

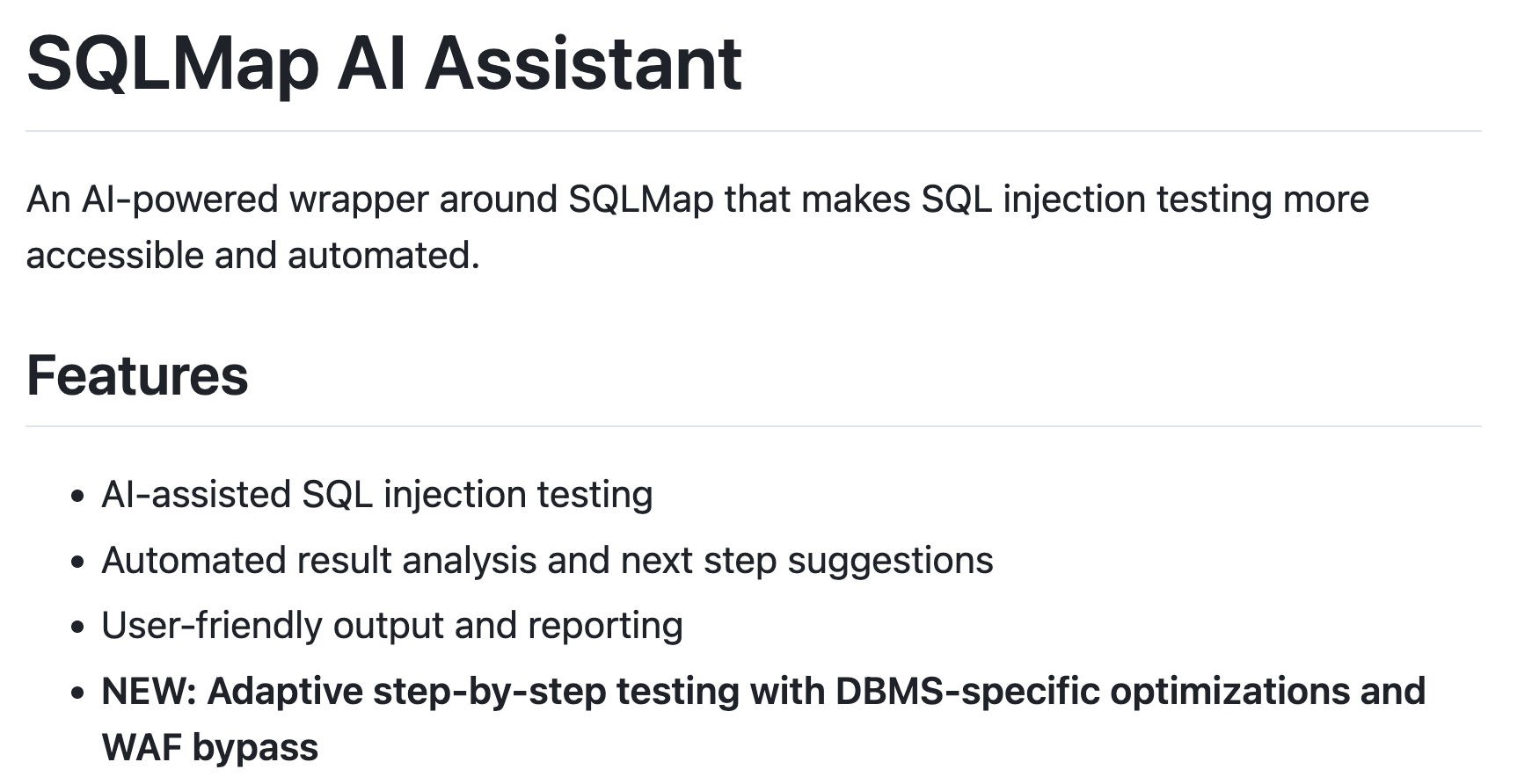

SQLMap

SQLMap, a widely used tool for SQL injection, recently gained an AI integration, allowing even inexperienced actors to find SQL injections easily.

Automated Bughunting by AI

There is already AI-based bug hunting.

Collected offensive AI approaches and projects:

- evilsocket's Operator https://x.com/evilsocket/status/1945175506812317901

(evilsocket also builds nerve, a very useful agentic AI framework)

LLMs combined with an attack framework: research by Anthropic

- Large Language Models (LLMs) that are not fine-tuned for cybersecurity can succeed in multistage attacks on networks with dozens of hosts when equipped with a novel toolkit. This shows one pathway by which LLMs could reduce barriers to entry for complex cyber attacks while also automating current cyber defensive workflows. These results show how LLMs could lower the barriers to conducting complex cyber attacks, underscoring the importance of investing in research into LLM capabilities for both attack and defense.

https://red.anthropic.com/2025/cyber-toolkits/

Wrap-up

AI is already widely used, not to craft new attacks, but to support current operations, delivering attacks faster and with better impact.

- Reconnaissance: AI can analyze vast datasets—such as network traffic patterns or public system information—to identify high-value or vulnerable targets. Machine learning models could predict which systems are most susceptible to disruption based on historical data or real-time analysis.

- Weaponization: AI can assist in crafting more sophisticated malware for botnets, such as bots that evade detection by adapting their behavior or signatures dynamically.

- Delivery: AI can optimize the timing, volume, and distribution of malicious traffic. For example, it could coordinate botnet activity to launch synchronized bursts that maximize resource saturation with minimal effort.

- Adaptation (Actions on Objectives): Through techniques like reinforcement learning, AI can adjust attack strategies in real-time—altering traffic patterns or intensity based on the target’s defensive responses—to maintain disruption.

As one of our colleagues put it:

Up to now, we had soldiers and weapons on the battlefield. The current state of AI development introduces exoskeletons for those soldiers; if you don't keep up, they will overrun you.

So, full circle back to the start:

It will be a fun ride.

References

- NIST: Adversarial Machine Learning: A Taxonomy and Terminology of Attacks and Mitigations https://nvlpubs.nist.gov/nistpubs/ai/NIST.AI.100-2e2025.pdf

- Google: Adversarial Misuse of Generative AI https://cloud.google.com/blog/topics/threat-intelligence/adversarial-misuse-generative-ai

- Anthropic: Detecting and Countering Malicious Uses of Claude: March 2025 https://www.anthropic.com/news/detecting-and-countering-malicious-uses-of-claude-march-2025

- OpenAI: Disrupting malicious uses of AI: June 2025 https://cdn.openai.com/threat-intelligence-reports/5f73af09-a3a3-4a55-992e-069237681620/disrupting-malicious-uses-of-ai-june-2025.pdf

- Google: A Framework for Evaluating Emerging Cyberattack Capabilities of AI https://arxiv.org/pdf/2503.11917

- SentinelOne: AkiraBot | AI-Powered Bot Bypasses CAPTCHAs, Spams Websites At Scale https://www.sentinelone.com/labs/akirabot-ai-powered-bot-bypasses-captchas-spams-websites-at-scale/

Continuous watch / later additions:

- AI-assisted recon

https://x.com/ReconVillage/status/1941712970960281822
